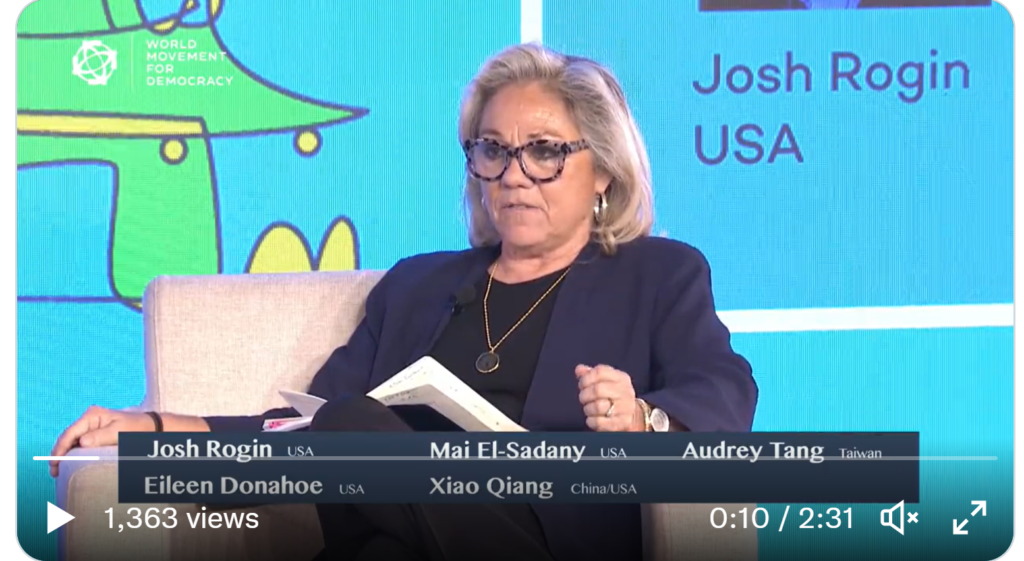

Artificial intelligence has “turbo-charged” existing forms of repression, giving authoritarian regimes disturbing new “social engineering tools” to monitor and shape citizen behavior, says Stanford University’s Eileen Donahoe.

“We’re in a very dark place,” she told the World Movement for Democracy’s 11th Assembly, as authoritarians are not only exporting repressive technologies, but also diffusing ideas, norms and narratives within international forums in an effort to shape the future global information order, presenting an “existential threat” to democracy.

“It’s important to recognize digital authoritarianism as a phenomenon that’s about more than the repressive application of tech,” said Donahoe, a National Endowment for Democracy (NED) board member. “We have to see it as an alternative model of governance that’s spreading around the world, competing with democracy,” she told the Post’s Josh Rogin.

Xiao Qiang (above), the founder and editor in chief of China Digital Times, concurs.

Digital authoritarianism is not “some distant dystopian thing,” he told the assembly. “The surveillance, the control, the coercion, and the technology are facts of life for Chinese citizens today. Big data and AI are centralized, highly efficient forms of control.”

But AI can be designed and implemented in a way that is inclusive, accountable, reliable and fair, argues Nanna-Louise Wildfang Linde, Microsoft’s new VP of European Government Affairs. The war in Ukraine shows “how high the stakes are [and].. the need to act now as a collective,” she adds, insisting that technology can enhance protection against cyber threats, defend election integrity and mitigate the threat of misinformation.

Historically, democracies have been better able to use information than autocracies because it was available to everyone and more minds could process it, argues historian Yuval Noah Harari. Democracy has been the exception, not the norm, throughout history, he cautions. Certain technological conditions are conducive to democracy, but AI [the subject of a recent NED Forum report] is likely to favor highly centralized regimes, he claims.

Do the ends justify the means when dealing with disinformation? asks Civic Media Observatory, reporting on how Brazil is handling ‘digital militias’ ahead of the presidential election.

“I come from a region where the internet and tech have been a beautiful thing,” said Mai El-Sadany, a human rights lawyer with the Tahrir Institute for Middle East Policy. It provided “a space where we’ve learned from each other” and exchanged ideas and information. But governments have intervened and sought to control it, she told the World Movement Assembly.

Democratic engagement is the key to addressing technology’s threats to democracy, said Taiwan’s “digital minister” Audrey Tang.

“The key is to learn with the people, to participate, develop trust in all parts of the process by learning to engage openly and positively while celebrating commonality and consensus instead of getting lost in the thickets of conflict,” she told the assembly.

Technology is a function of this underlying system of oppression geared to cement China’s control of Xinjiang, which has become a symbol of the state’s omnipotence and the belief by certain governments that they can use whatever means necessary to subjugate their citizens, notes Carnegie analyst Steve Feldstein:

Xinjiang stands as a warning about what the road to impunity looks like. But it is also important not to be overly deterministic about the role of technology in fostering repression, whether in Xinjiang or elsewhere. The overriding motivation guiding China’s policies in the region is a commitment to crushing dissent in the Uyghur community. Even if China lacked access to artificial intelligence technology or facial recognition cameras, there is little doubt its security forces would find alternate means to subdue citizens in the region.

U.S. legislators are proposing steps to counter the influence of foreign adversaries on U.S. telecommunications infrastructure, reports suggest:

The Foreign Adversary Communications Transparency (FACT) Act would provide critical telecommunications transparency by requiring the Federal Communications Commission (FCC) to publish a list of companies that hold FCC authorizations, licenses, or other grants of authority with 10 percent or more ownership by foreign adversarial governments, including China, Russia, Iran, or North Korea.
