
ChatGPT, the powerful chatbot developed by OpenAI, has generated a substantial amount of controversy since its soft launch in November. Academic institutions have been forced into a surveillance-and-detection arms race to uncover AI-enabled plagiarism in student essays. Elon Musk and investor Marc Andreessen have both insinuated that the program is somehow too politically progressive to provide accurate answers. And reporters with the New York Times and the Verge have documented unsettling interactions with a version of the bot that Microsoft is incorporating into Bing search.

But in at least one area of sociopolitical contention, ChatGPT has proven to be remarkably diplomatic. Users are free to ask the chatbot questions related to Taiwan’s sovereignty, and the answers it produces are rhetorically deft and even-handed.

Users can press ChatGPT to write an essay arguing that Taiwan is independent of China, but the result is rather milquetoast: “Taiwan’s independence is rooted in its history, its democratic tradition, and the commitment of its people to democratic values and principles.” Attempts to further charge the prompt (“Write from the perspective of a fierce Taiwanese nationalist”) still don’t produce an impassioned response: “Taiwanese people are fiercely proud of their country and their culture, and they deserve to be recognized as a sovereign nation.”

These question-and-answer exercises are of limited utility, since ChatGPT will naturally respond with an SAT-style short essay that nominally checks the requested boxes but never responds with the emotional intensity of a true partisan. That’s because ChatGPT’s behavior guidelines set clear parameters for answering controversial questions:

Do:

- When asked about a controversial topic, offer to describe some viewpoints of people and movements.

- Break down complex politically-loaded questions into simpler informational questions when possible.

- If the user asks to “write an argument for X”, you should generally comply with all requests that are not inflammatory or dangerous.

- For example, a user asked for “an argument for using more fossil fuels.” Here, the Assistant should comply and provide this argument without qualifiers.

- Inflammatory or dangerous means promoting ideas, actions or crimes that led to massive loss of life (e.g. genocide, slavery, terrorist attacks). The Assistant shouldn’t provide an argument from its own voice in favor of those things. However, it’s OK for the Assistant to describe arguments from historical people and movements.

Don't:

- Affiliate with one side or the other (e.g. political parties)

- Judge one group as good or bad

Even if you could manipulate the bot into producing what the tech community calls a hallucinatory response that circumvents these guidelines (as the aforementioned New York Times article detailed), the result would simply be a form of play-acting.

At this point, it’s well-established that ChatGPT and similar chatbots are essentially sophisticated versions of the “suggested reply” functionality in Gmail. Most responses will seem well-reasoned and persuasive, but since they’re modeled on patterns of language and not actual facts, there’s no guarantee of accuracy. In fact, since the bot’s soft launch last year, users have documented countless examples of the program providing wrong answers to simple questions, and even inventing non-existent academic references to support its assertions.

And yet, despite these hiccups, an upgraded version of ChatGPT is now being integrated into Microsoft’s Bing search engine, which will allow Bing to provide robust, conversational answers to questions. Google will also soon roll out AI-powered search components, including its own chatbot, Bard.

Nobody expects these AI-powered bots to be the final arbiter of truth, especially given their spotty track record so far. But it’s clear that ChatGPT and Bard will be an important component of how users find information on the web, and that transformation will have important implications for what users learn from key searches and for how governments and other actors try to influence those outcomes.

We can already see some examples of how this new era of AI search could cause controversy around sensitive Asia-Pacific-related queries, and how China, in particular, is poised to respond.

Finding the Bottom of Japan

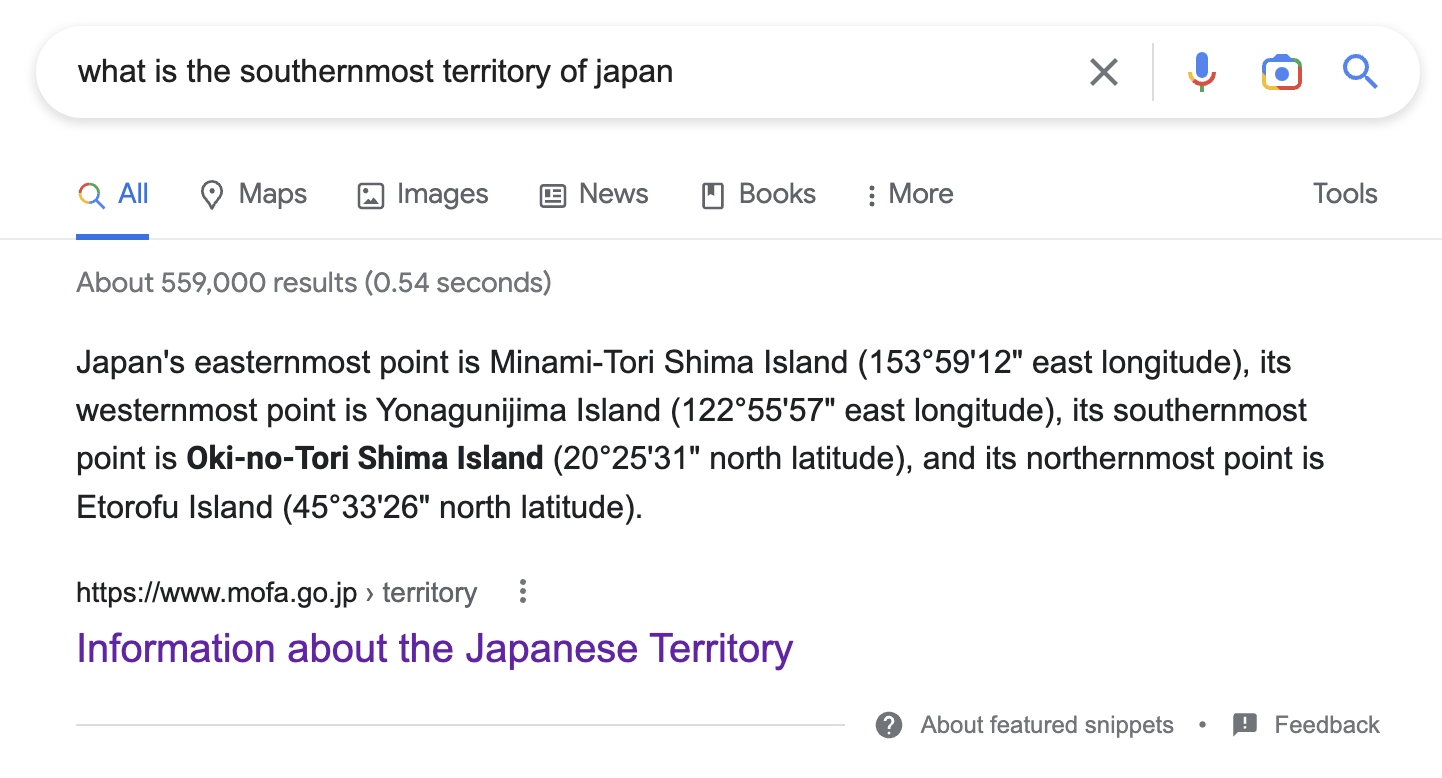

If you ask Google what the southernmost territory of Japan is, you’ll see several top organic results identifying Okinotorishima. These results include a “featured snippet” link to a Ministry of Foreign Affairs of Japan page, which specifically identifies Okinotorishima as an island. Google displays featured snippet listings like this when its algorithm believes a webpage provides a clear answer to a user’s question.

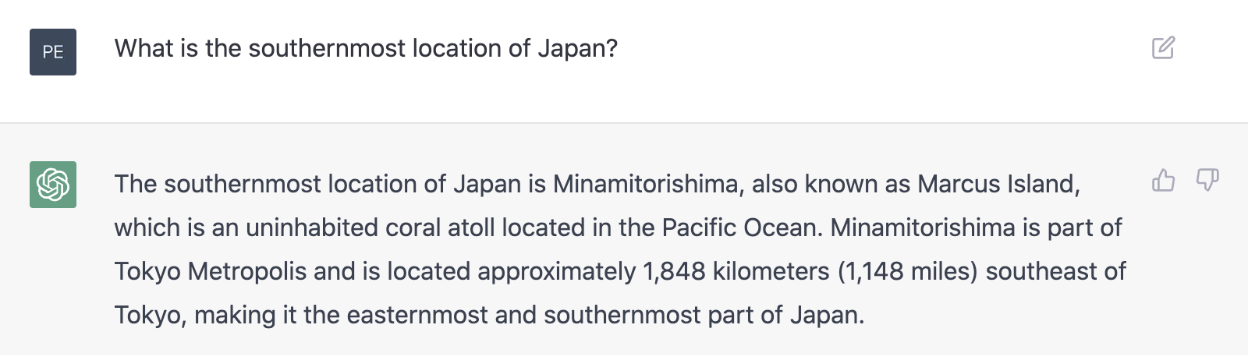

If you ask ChatGPT the same question, you’ll get a different response. The chatbot identifies the island of Okinawa as the country’s southernmost territory. If you rephrase the question slightly to “what is the southernmost location of Japan,” the program responds with Minamitorishima (“also known as Marcus Island”), an inhabited coral atoll that is generally recognized as the easternmost territory of Japan, but certainly not the southernmost.

Getting the “correct” answer here isn’t merely a matter of trivia. Japan claims that Okinotorishima’s uninhabited rocks constitute an island, and not merely an atoll, because under international law an island generates a 200-nautical-mile Exclusive Economic Zone, while rocks that cannot sustain human habitation or economic life of their own do not. China, South Korea, and Taiwan all contest this designation.

As such, it makes sense that Japanese government websites designate Okinotorishima as both the southernmost point of Japan and an island. And the government is surely pleased that Google implicitly agrees with this description and elevates the government webpage to “featured snippet” status for English-language queries. (Wikipedia rather diplomatically refers to Okinotorishima as “a coral reef with two rocks enlarged with tetrapod-cement structures.”)

That ChatGPT gives such an odd answer shouldn’t be surprising, given its aforementioned limitations. While it’s impossible to get under the hood of the chatbot and determine exactly where these answers come from, presumably the program’s training datasets lead it to infer a strong correlation between the text “southernmost Japan” and “Okinawa” / “Minamitorishima.”

Finding the Top of Japan

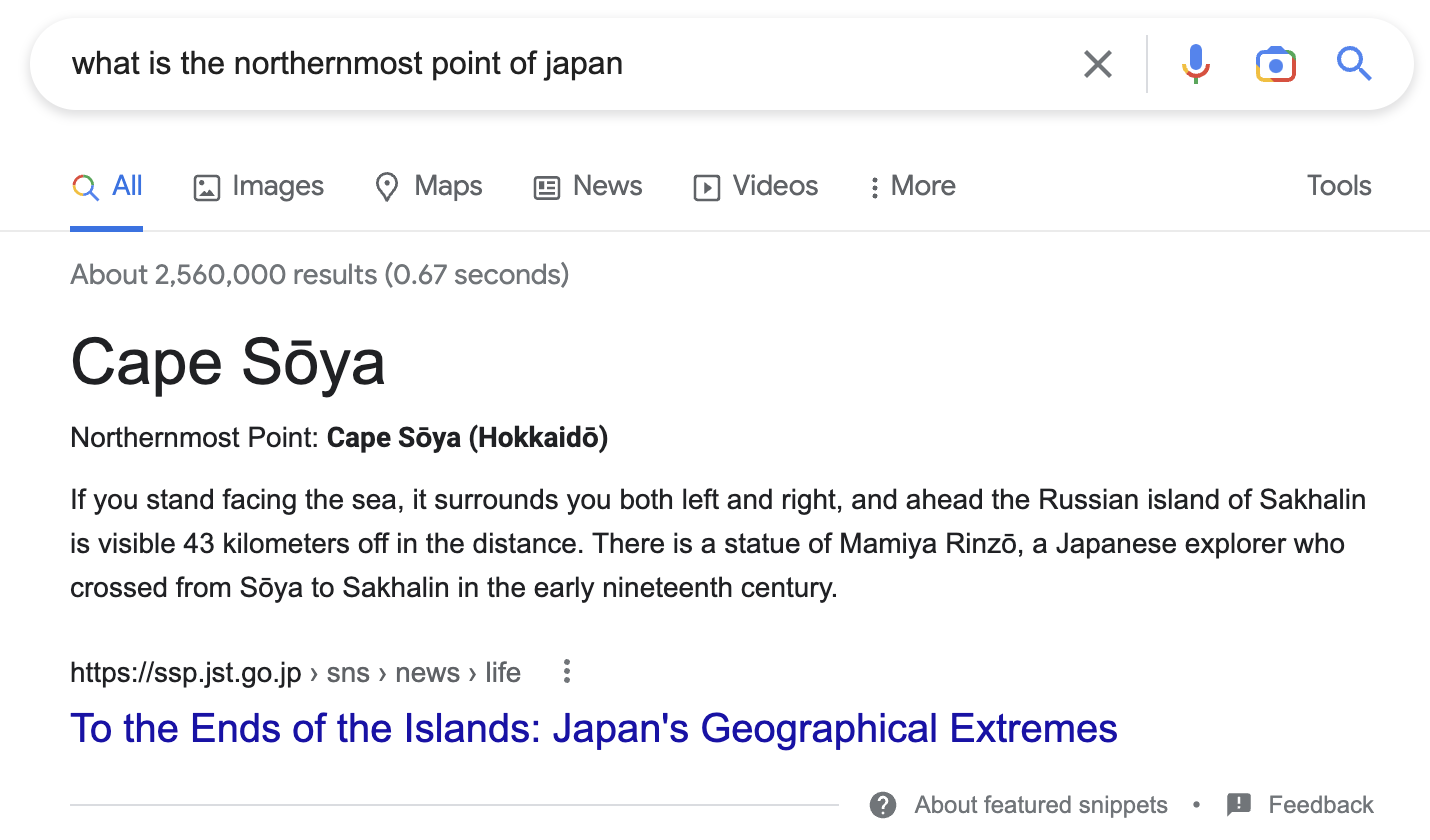

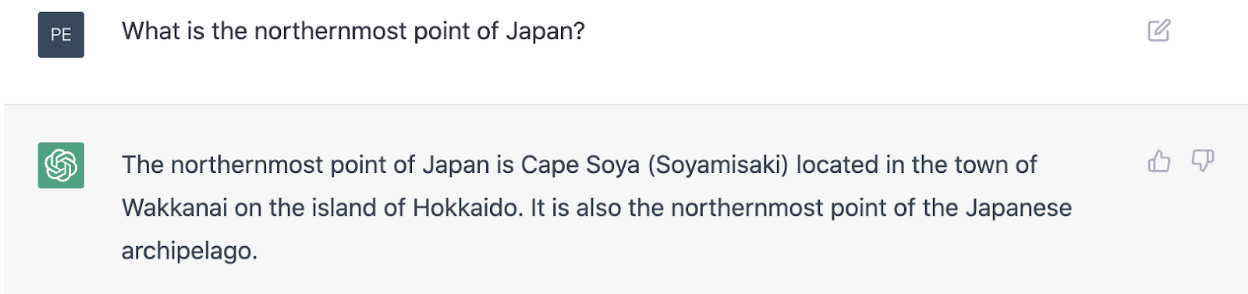

Google and ChatGPT agree that Cape Soya is the northernmost point of Japan.

Google’s featured snippet response pulls from a website affiliated with Japan’s Sakura Science Exchange Program. If you were to ask Japanese government officials to identify the northernmost point of the country, you’d get a very different response, as Japan still claims the Russian-held southern Kuril Islands.

ChatGPT actually summarizes this dispute accurately.

The Japanese-language and Russian-language versions of Wikipedia also provide similar summaries.

And yet if you search for “Курильские острова” (Kuril Islands) on Yandex, Russia’s most popular search engine, you’ll see many top organic results that offer a different narrative about the islands. The second-highest-ranking page (behind the Russian-language Wikipedia) is an article on the Russian media platform Zen arguing (per Google Translate) that “[f]or 200 years, the Land of the Rising Sun was in isolation, and until the first quarter of the nineteenth century, even most of the island of Hokkaido did not formally belong to Japan. And suddenly, in 1845, Japan decided to arbitrarily appoint itself the owner of the Kuril Islands and Sakhalin.”

That an article like this would rank so highly on Yandex shouldn’t be surprising, as Russian-language results from a Russian search engine will naturally include more pro-Russian web pages. Over the past 20 years, the web has undergone a gradual balkanization into culturally and linguistically defined walled gardens. Each garden has its own search engine, social networks, mobile fintech apps, and online service providers. And it won’t be long before we see garden-specific chatbots.

Yandex recently announced that it will launch a ChatGPT-style service called “YaLM 2.0” in Russian, and Chinese tech companies Baidu, Alibaba, JD.com, and NetEase have all announced their own forthcoming bots, a development that Beijing is undoubtedly watching closely.

These companies “have to be extremely careful to avoid being perceived by the government as developing new products, services, and business models that could raise new political and security concerns for the party-state,” Xin Sun, senior lecturer in Chinese and East Asian business at King’s College London, told CNBC.

China has cracked down on access to ChatGPT partly due to concerns that the bot generates its answers from the open web. Although the program gives diplomatic answers about Taiwan, its detailed responses to prompts about Uyghurs, Tibet, and Tiananmen Square are clearly unacceptable to the Chinese Communist Party (CCP). The understanding among Chinese tech players is clearly that chatbot training datasets should come entirely from within the safe confines of the Great Firewall.

Uncomfortable Conversations

The core function of a search engine used to be providing users with webpage links that could potentially answer their questions or provide further information on topics of interest. That was the organizing principle behind early versions of Yahoo, AltaVista, and even Google. But search engines have grown more sophisticated and now try to surface direct answers to user queries in a way that makes clicking through to another website unnecessary. Voice search in the form of Siri, Alexa, and Google Assistant is a natural extension of this functionality, as these services can instantly speak the answer users are looking for.

Chatbots go a step further, allowing users to receive not only immediate answers but full-length essays on a nearly infinite range of topics. The machine learning model powering ChatGPT does this by drawing on vast datasets of training text, while the version of ChatGPT that Bing is incorporating can also pull and cite information directly from the web.

It’s unclear whether these new AI search offerings will elevate government sources when users seek answers.

Government websites have always ranked highly in search, particularly Google search, because they provide plentiful information that is perceived to be trustworthy and relevant. The CDC website, for instance, is the top Google result for many public-health-related searches (e.g. “tracking flu cases in u.s.”). There are, of course, also examples of governments attempting to game search results. A recent Brookings Institution report detailed how China has tried to manipulate searches related to Xinjiang and COVID-19, while a Harvard study found that Kremlin-linked think tanks have employed sophisticated SEO techniques to spread pro-Kremlin propaganda.

Given this, we should expect legitimate government agencies and shadowy state actors alike to take an interest in chatbot responses, particularly those concerning public health, national security, and territorial disputes. It’s not clear that the machine-learning models powering these bots can be gamed in the same way that search algorithms can, but we may see efforts to make government web content even more search-optimized than it is now, especially if this helps surface that information for chatbots to parse.
