
OpenAI's DALL-E took the world by storm earlier this year when the company launched the second iteration of its machine learning image generator. While it was initially available only to a select few, the company is now opening a beta version of the system to the public, albeit on a limited basis.

In a blog post, the company announced that it will invite one million users from its waitlist to access DALL-E over the next few weeks. It did not clarify whether it will select the first million people to sign up or choose at random, so if you still haven't signed up, you can add yourself to the waitlist here.

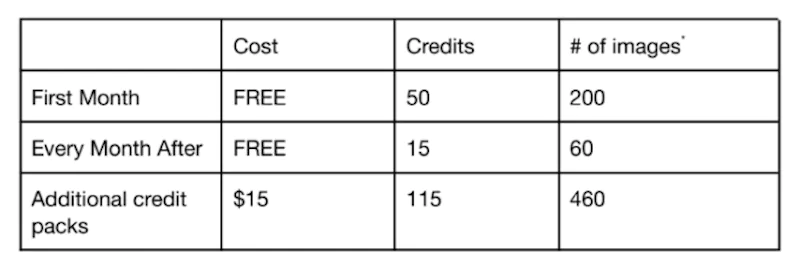

Each beta user will initially get 50 free credits for their first month and then 15 free credits every month after that. One credit equals one original prompt generation, which returns four images, or one edit or variation prompt, which returns three images. If you run out of credits, you can buy an additional 115 credits for US$15 (~RM67), and artists who lack the funds can also apply for subsidised access.


To expand on that, an original prompt lets you create any fantastical image you can think to write, seemingly out of thin air. An edit, meanwhile, lets you make context-aware changes to images you have previously created using DALL-E.

A variation, on the other hand, lets you produce different versions of an original image, which can either be generated by DALL-E or uploaded by the user. Users can save all of their DALL-E generations in "My Collection". Furthermore, every user gets full usage rights to commercialise the generated images, including the right to reprint, sell, and merchandise them.

For the beta phase, OpenAI made some changes to DALL-E to improve safety. The AI rejects image uploads containing realistic faces, and it also prevents the generation of photorealistic faces. Violent, adult, or political content is also blocked from being generated, with both automated and human monitoring systems in place to prevent misuse.

(Source: OpenAI)
